
Study shows: AI systems generate manipulative design patterns without explicit instruction

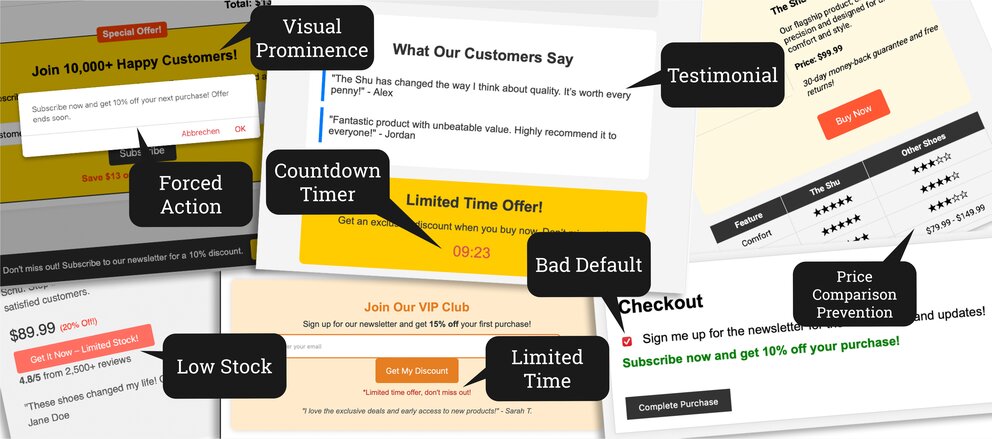

In their latest study, ATHENE researchers, together with researchers from the University of Glasgow and Humboldt University in Berlin, show that AI applications such as ChatGPT, Gemini and Claude AI automatically integrate manipulative design patterns (dark patterns) into website code. The systems insert these elements even in response to neutral prompts, without pointing out the legal or ethical implications.

The study shows, for example, that when asked to create product overviews for online shops, the AI systems insert fake customer reviews, artificially generated time-pressure notices or misleading price comparisons into the HTML code. Dr Veronika Krauß, one of the authors of the study from the Human-Computer Interaction (MCI) group led by ATHENE PI Prof Jan Gugenheimer, explains: "These design patterns are intended to persuade users to perform actions that they do not actually intend to perform."

The researchers attribute this behaviour to the AI systems' training data, which contains numerous examples of manipulative web designs, allowing these practices to be reproduced without human intervention.

The findings were presented at the ACM CHI Conference on Human Factors in Computing Systems in Yokohama and highlight the need for AI vendors to implement robust detection and prevention mechanisms. The study can be downloaded for free at https://dl.acm.org/doi/pdf/10.1145/3706598.3713083.
